Pipelines is now streaming in beta

Today, Alchemy is announcing Pipelines, the easiest way to stream real-time blockchain data into your infrastructure. If you’re interested, sign up for beta access here.

Problem: Blockchain data pipelines are slow and expensive

Developers building on top of blockchain data currently have a few options:

For engineers with application-specific needs, ingesting data from node APIs gives you control over how you want to process and join data.

However, building and maintaining a data pipeline can take weeks of engineering time and expertise. You have to set up a performant backfill, a reliable ingestion service, and a mechanism for dealing with reorgs. On top of all of that, you have to check for data completeness. All of this is time wasted that could be spent on building product features.
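To make the reorg part of that work concrete, here is a minimal sketch of the bookkeeping an in-house ingestion service typically needs. It is an illustration only, not how Pipelines is implemented: the `Block` type and the `apply_block` helper are hypothetical names, and the sketch assumes blocks arrive in order and that the service keeps a window of recently ingested blocks in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    number: int
    hash: str
    parent_hash: str


def apply_block(chain: list[Block], block: Block) -> list[Block]:
    """Append `block` to `chain` and return any blocks a reorg retracted.

    `chain` is the window of blocks already ingested, oldest first.
    Stored blocks are popped until the tip is the new block's parent;
    everything popped belonged to an abandoned fork, so the rows derived
    from those blocks must be removed from the destination.
    If the parent is not in the window at all, the whole window is retracted.
    """
    retracted: list[Block] = []
    while chain and chain[-1].hash != block.parent_hash:
        retracted.append(chain.pop())
    chain.append(block)
    return retracted
```

When `apply_block` returns a non-empty list, the service has to delete or mark the data it wrote for those blocks before writing the replacement, which is the step that is easy to get wrong in a hand-rolled pipeline.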

Solution: Pipelines blockchain data streaming

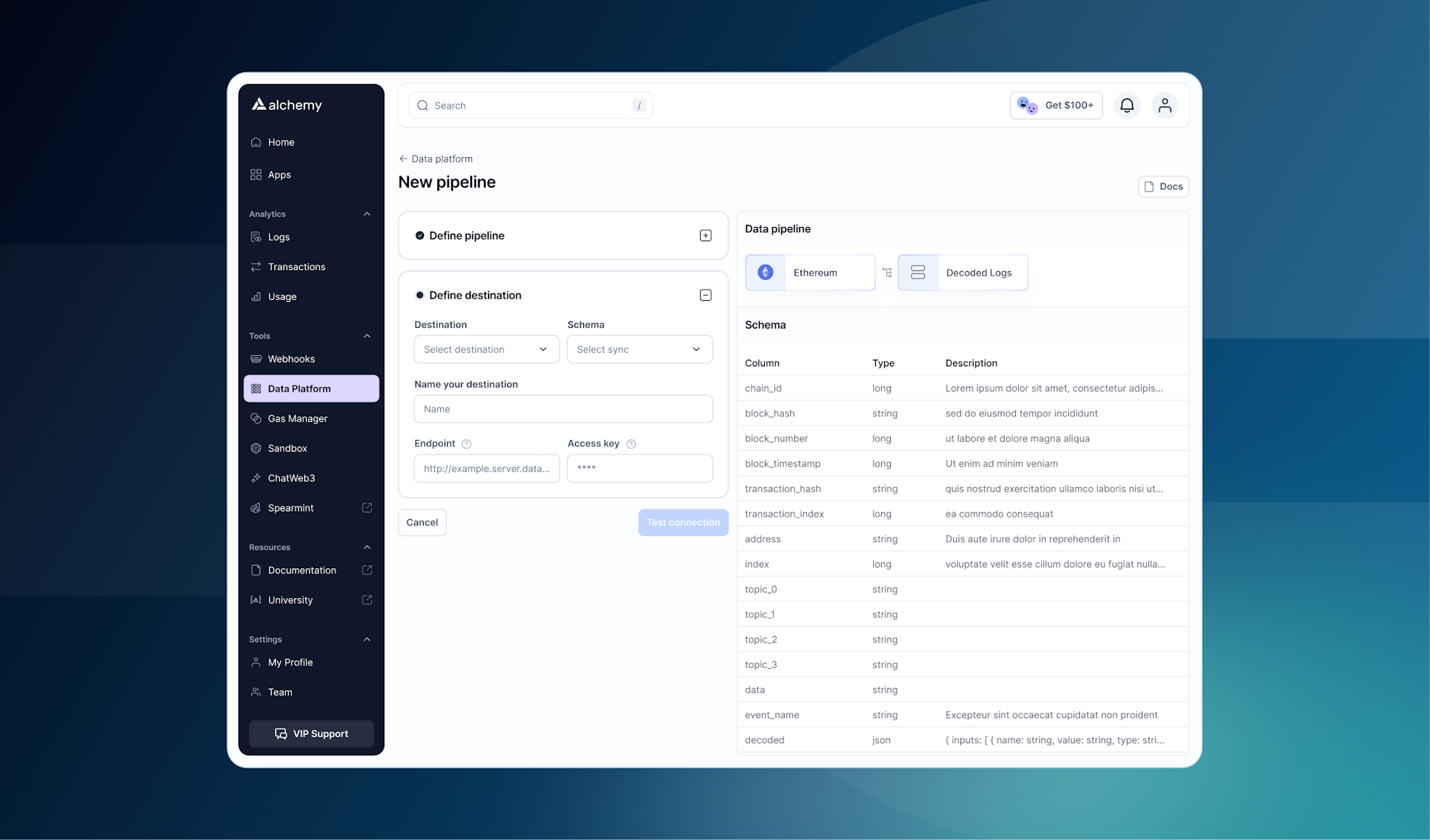

Alchemy Pipelines solves all of this with a point-and-click interface for creating data pipelines.

Simply configure a data source (e.g. decoded logs) and a destination (e.g. Postgres), and data will start flowing right away. The whole setup takes less than 5 minutes.

Out of the box, Pipelines comes with the following features:

Easy-to-use interface: Set up your first pipeline in under 5 minutes using our intuitive web UI.

Fast historical backfills: Write data straight to your destination of choice 100x faster than with JSON-RPC calls.

Real-time ingestion of incoming data: Gain peace of mind with reliable data ingestion within seconds of it being published on-chain.

Automatic reorg handling: Handle chain reorgs with out-of-the-box data retraction.

ABI decoding: Save time by using our built-in log decoding.
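For a sense of what log decoding involves when done by hand, the sketch below decodes a raw ERC-20 `Transfer` log as returned by a node. It is a simplified illustration, not the Pipelines decoder: `decode_transfer` is a hypothetical helper, it handles only this one event, and it assumes the standard `Transfer(address indexed from, address indexed to, uint256 value)` layout.

```python
# keccak256("Transfer(address,address,uint256)"), the event's topic0.
TRANSFER_TOPIC = "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"


def decode_transfer(log: dict) -> dict:
    """Decode a raw ERC-20 Transfer log into from/to/value fields."""
    topics = log["topics"]
    if len(topics) != 3 or topics[0].lower() != TRANSFER_TOPIC:
        raise ValueError("not an ERC-20 Transfer log")
    return {
        # Indexed addresses are left-padded to 32 bytes; keep the last 20.
        "from": "0x" + topics[1][-40:],
        "to": "0x" + topics[2][-40:],
        # The non-indexed uint256 value is the only word in `data`.
        "value": int(log["data"], 16),
    }
```

A general-purpose decoder has to do this for every event signature in every contract ABI you care about, which is the work built-in decoding removes.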

Web3 data streaming use cases

If you’re ingesting a significant amount of data via Webhooks or eth_getLogs, Pipelines could save you both time and money over your in-house system. Here are a few ways our beta customers are using Pipelines:

Aave token transfers

One user wanted to ingest all transfers of the Aave token. They set up a pipeline in under 3 minutes, and the data (over 1.3M transactions) streamed into their database within 10 minutes.

NFT collection events

One customer previously used an in-house eth_getLogs polling system to ingest events from their NFT collection, but was struggling with maintenance time and reorgs. Pipelines helped them set up a new ingestion system in less than 5 minutes.
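As a rough picture of what such an in-house system has to manage, the sketch below builds the eth_getLogs JSON-RPC requests needed to cover a block range in chunks. The helper name `get_logs_requests` and the default `chunk_size` of 2000 blocks are assumptions for illustration; the range limits a node provider enforces vary, and a real poller would also need retries, rate limiting, and reorg handling.

```python
from typing import Iterator


def get_logs_requests(
    address: str, first_block: int, last_block: int, chunk_size: int = 2000
) -> Iterator[dict]:
    """Yield eth_getLogs payloads covering [first_block, last_block] inclusive."""
    request_id = 0
    for start in range(first_block, last_block + 1, chunk_size):
        end = min(start + chunk_size - 1, last_block)
        request_id += 1
        yield {
            "jsonrpc": "2.0",
            "id": request_id,
            "method": "eth_getLogs",
            # Block numbers are passed as hex strings in JSON-RPC.
            "params": [
                {"address": address, "fromBlock": hex(start), "toBlock": hex(end)}
            ],
        }
```

Each payload would be POSTed to a node endpoint and the returned logs decoded and written to the database, repeating as new blocks arrive.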

Roadmap

If you’re interested in trying Pipelines, sign up for our beta waitlist!

The team is iterating quickly based on user feedback. Interested in a custom setup or looking for a specific data source, chain, or destination? Email the Pipelines team.
